Hi @cotron.1,

I have looked into this issue for you on the backend computer vision server, and I can confirm two things:

1. The HotSpotter ID algorithm was, and still is, using the background subtraction code as expected for the first three leopard matches you posted (4 days ago).

2. The most recent match you posted (4 hours ago) did NOT use the background subtraction code. I have pushed a fix to our open-source repository to address this issue; the fix should be deployed on the Whiskerbook platform by the end of the week. Please re-submit any ID jobs after the fix is deployed so that they use the updated code.

For number 1, all of the annotations used for those matches had the species label set as leopard. This is ideal because the computer vision code will automatically apply the appropriate background subtraction model when it sees the species leopard or panthera_pardus. However, as @jason has already described, the challenge is that the background segmentation algorithm is not perfect and will still let through some background from time to time.

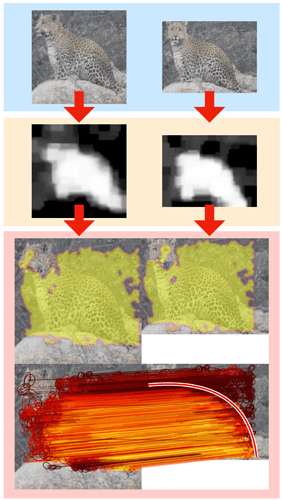

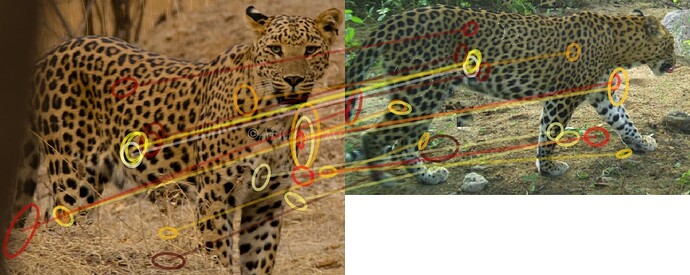

Moreover, the background subtraction algorithm does not black out the background pixels; instead, it down-weights them. A very strong background match (e.g., from images taken seconds apart) will sometimes still outweigh a poor foreground match, even after background subtraction has been applied. Below is a perfect example of this case, taken from your original match examples.

The original images (blue box) are given to the background subtraction model, which produces a foreground/background mask (yellow box) where light pixels correspond to “likely leopard” and black pixels to “likely background.” After the mask is generated, the keypoints found by HotSpotter are weighted based on the brightness of the mask area they cover. This is hard to see in the yellow-highlighted match visualization (red box, top) because the individual matching keypoints are converted into a heatmap.

When we visualize the actual matching hotspots (red box, bottom), we can see an overwhelming correspondence between these two images. This is not surprising, as the two images were taken only a few seconds apart. What the bottom visualization also shows is that the color of the individual hotspots (and their correspondence lines) is based on the background mask: ellipses are bright yellow when they are determined to be likely leopard and dark red when they are likely background. I have added a curve overlay to the bottom image to show where the matching keypoints transition from foreground matches to background matches, which predictably follows the curve of the leopard’s spine. HotSpotter visualizations by Dr. Crall.

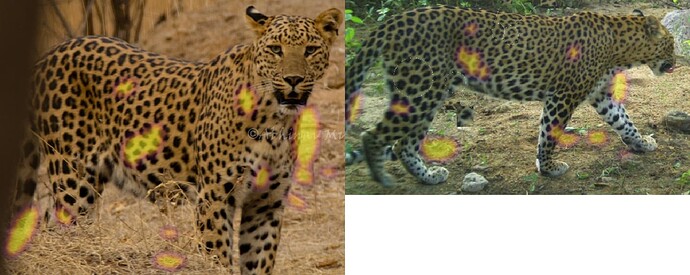

In the other matches, it is easier to see how the background segmentation is down-weighting background keypoints (dark red color).
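To make the down-weighting idea concrete, here is a rough sketch of how many strong background matches can still outscore a weak foreground match when the mask only dims the background instead of zeroing it. This is not the actual HotSpotter code: the function names, the square patch used in place of the keypoint ellipse, the toy mask values, and the match scores are all assumptions made up for illustration.

```python
import numpy as np

def foreground_weight(mask, x, y, radius=2):
    """Mean mask brightness, scaled to 0..1, in a square patch around a keypoint.

    Hypothetical helper: a square patch stands in for the keypoint's ellipse.
    """
    h, w = mask.shape
    x0, x1 = max(0, x - radius), min(w, x + radius + 1)
    y0, y1 = max(0, y - radius), min(h, y + radius + 1)
    return float(mask[y0:y1, x0:x1].mean()) / 255.0

def weighted_match_score(mask, matches, radius=2):
    """Sum of raw match scores, each scaled by its keypoint's foreground weight."""
    return sum(score * foreground_weight(mask, x, y, radius)
               for x, y, score in matches)

# Toy mask: left half is "likely leopard" (bright),
# right half is "likely background" (dark, but not fully black).
mask = np.zeros((10, 10), dtype=np.uint8)
mask[:, :5] = 230
mask[:, 5:] = 25

# (x, y, raw_score): one weak match on the animal, four strong ones on the scenery.
foreground_matches = [(2, 5, 1.0)]
background_matches = [(7, 2, 3.0), (7, 5, 3.0), (7, 7, 3.0), (8, 4, 3.0)]

fg_score = weighted_match_score(mask, foreground_matches)
bg_score = weighted_match_score(mask, background_matches)
print(f"foreground: {fg_score:.3f}  background: {bg_score:.3f}")
```

In this toy setup each background keypoint keeps roughly a tenth of its raw score, yet the four of them together still add up to more than the single foreground match, which is the same effect seen in the near-duplicate images above.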

For number 2, it appears that those two annotations had the species label panthera_pardus_fusca, which corresponds to the Indian leopard, a subspecies of leopard. While the backend computer vision server has been configured for leopards, it was not configured for that specific subspecies label. I have updated the code to add that configuration permanently, and all future HotSpotter ID matches will use the pre-existing leopard background model on Indian leopard images.
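As a simplified picture of what went wrong, you can think of the species label as a key in a lookup table that selects the background model. The sketch below is only an illustration of that idea; the table, the function name, and the model name are hypothetical and do not reflect the real configuration in the repository.

```python
# Hypothetical lookup table: species label -> background model name.
BACKGROUND_MODELS = {
    "leopard": "leopard",
    "panthera_pardus": "leopard",
}

def background_model_for(species, models=BACKGROUND_MODELS):
    """Return the background model for a species label, or None if unconfigured.

    None means the match runs without any background subtraction.
    """
    return models.get(species)

# Before the fix: the subspecies label is not in the table, so no model is applied.
before = background_model_for("panthera_pardus_fusca")

# After the fix: the subspecies label reuses the existing leopard model.
patched_models = {**BACKGROUND_MODELS, "panthera_pardus_fusca": "leopard"}
after = background_model_for("panthera_pardus_fusca", patched_models)

print(before, after)
```

The point is that an unrecognized label fails silently: the match still runs, just without the background model, which is why the problem only showed up in the visualizations.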

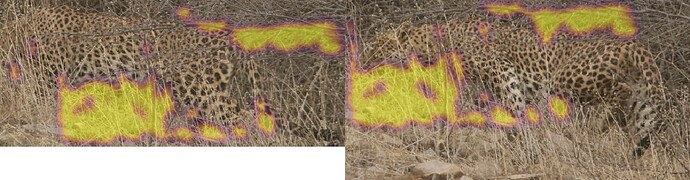

The match you posted is challenging for background subtraction because the leopard is seen behind tall grass. This will likely confuse the background model to a degree, and the matching keypoints will probably focus more on the tree branches, twigs, and grass details. For now, I’ve manually run those images through the leopard background model so you can see what it produces. See below:

Image 1

Image 2

Because the background model was not used for this HotSpotter match, we can see clearly that the background is matching with high confidence (bright yellow keypoints). Unfortunately, this match was not found by comparing the leopard’s body texture at all, but this behavior should improve once the background model is turned on for Indian leopards.