The machine learning (ML) system supports up to 26 possible viewpoints (plus one more for “unknown”): wildbook-ia/constants.py at ac433d4f2a47b1d905c421a36c497f787003afc3 · WildMeOrg/wildbook-ia · GitHub. When the ML models are trained, we look at the distribution of the viewpoints and intentionally collapse some smaller categories into a single bucket in order to generate more examples in total.

For example, this is the code that maps the ground-truth viewpoints we have into the appropriate bucket:

label_two_species_mapping = {

'wild_dog' : 'wild_dog',

'wild_dog_dark' : 'wild_dog',

'wild_dog_light' : 'wild_dog',

'wild_dog_puppy' : 'wild_dog_puppy',

'wild_dog_standard' : 'wild_dog',

'wild_dog_tan' : 'wild_dog',

'wild_dog+' : 'wild_dog+tail_general',

'wild_dog+____' : 'wild_dog+tail_general',

'wild_dog+double_black_brown' : 'wild_dog+tail_multi_black',

'wild_dog+double_black_white' : 'wild_dog+tail_multi_black',

'wild_dog+long_black' : 'wild_dog+tail_long',

'wild_dog+long_white' : 'wild_dog+tail_long',

'wild_dog+short_black' : 'wild_dog+tail_short_black',

'wild_dog+standard' : 'wild_dog+tail_standard',

'wild_dog+triple_black' : 'wild_dog+tail_multi_black',

}

label_two_species_list = sorted(list(set(label_two_species_mapping.values())))

label_two_viewpoint_mapping = {

species: {

None : None,

'unknown' : None,

'left' : 'left',

'right' : 'right',

'front' : 'front',

'frontleft' : 'frontleft',

'frontright' : 'frontright',

'back' : 'back',

'backleft' : 'backleft',

'backright' : 'backright',

'up' : 'up',

'upfront' : 'upfront',

'upleft' : 'upleft',

'upright' : 'upright',

'upback' : 'upback',

'down' : 'down',

'downfront' : 'down',

'downleft' : 'down',

'downright' : 'down',

'downback' : 'down',

}

for species in label_two_species_list

}

for viewpoint in label_two_viewpoint_mapping['wild_dog_puppy']:

label_two_viewpoint_mapping['wild_dog_puppy'][viewpoint] = 'ignore'

We can see in the above mapping that down, downfront, downleft, downback, and downright are all mapped to a single down viewpoint. The reason we do this is because the model will have too few examples of downfront compared to the overwhelming examples of left and right to properly train the model. This mapping only applies to the automated outputs of the ML models that predict viewpoint, but this restriction should not be a problem in Wildbook’s interface. We may need to make a ticket in Wildbook to allow this feature in the interface, separate from what the ML system is producing.

We also need to quickly clarify the terminology that is used by the ML system. We have 6 possible primary viewpoints: front, back, left, right, up, down. These viewpoints are defined where it is the answer to the question, “what side of the animal are you looking at?” For example, if you are seeing the underneath belly of the animal, you are seeing the bottom of the animal, and therefore the viewpoint is “down”. At first, you’ll ask why we use “up” and “down” instead of “top” and “bottom”. We do this for a very specific and boring reason: abbreviation. If we use up and down, we can abbreviate all of the 6 primary viewpoints as F, B, L, R, U, and D. If we used “top” and “bottom”, there would be a conflict between “back” and “bottom” when we abbreviate, so we use “down” instead.

Beyond the 6 primary viewpoints, we can have secondary viewpoints as well as the combination of two primary viewpoints. There are also tertiary viewpoints that are the combination of three of the primary viewpoints. For example, the viewpoints front-right (or FR) is the viewpoint seeing the front of the animal and the right side of the animal. A viewpoint of DBR (or down-back-left) is a viewpoint where you see the bottom, back, and left-side of the animal all at the same time. In general, tertiary viewpoints are fairly rare and we almost exclusively use only primary and secondary viewpoints in all of our ML models.

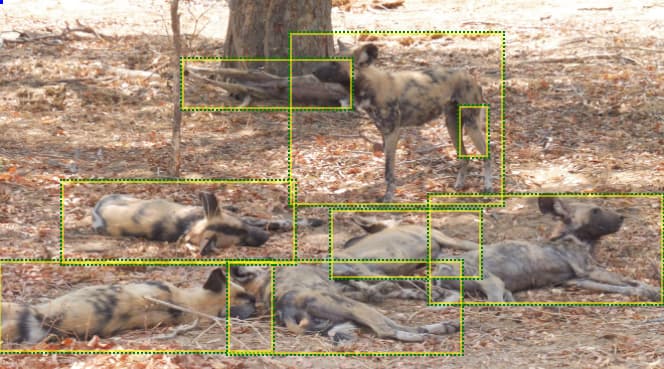

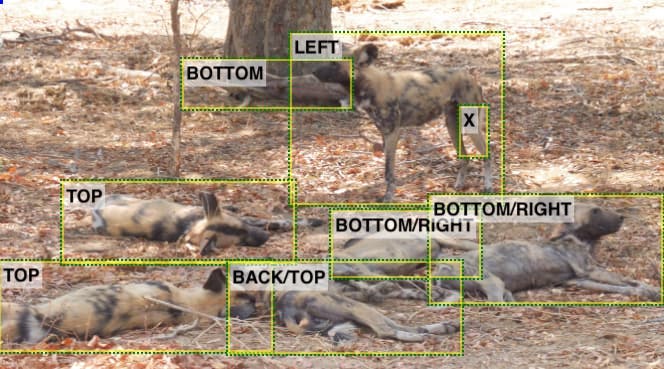

Going back to the original example image, there are only one animal where only the bottom is being seen. Below are my annotated viewpoints for each of the boxes:

I agree that the Wildbook platform can add the ability to annotate viewpoints beyond the ones produced by the ML models. We will likely cross this threshold after the migration to the new Codex framework.